- R Notebook Cheat Sheet 2020

- R Notebook Cheat Sheet

- R Notebook Cheat Sheet Download

- R Notebook Cheat Sheet Grade

- R Notebook Cheat Sheet Free

- Notebook Sheet Pdf

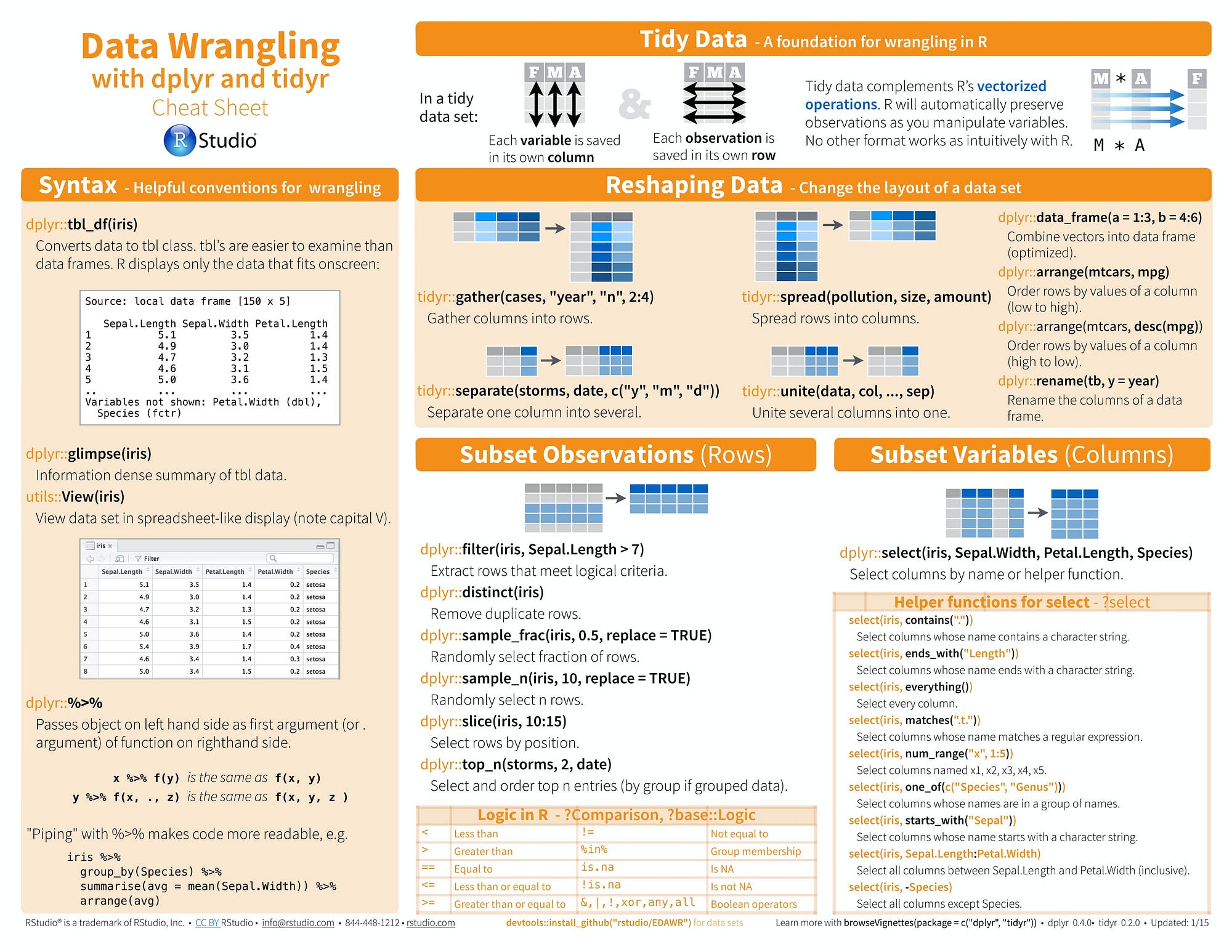

Cheat sheet published 21st March, 2016; updated 21st March, 2016. Help yourself to these free books, tutorials, packages, cheat sheets, and many more materials for R programming. There's a separate overview for neat little R programming tricks. Introductory R cheat sheets include the Base R cheat sheet by Mhairi McNeill and a Base R functions cheat sheet.

A notebook is a collection of runnable cells (commands). When you use a notebook, you are primarily developing and running cells.

All notebook tasks are supported by UI actions, but you can also perform many tasks using keyboard shortcuts. Toggle the shortcut display by clicking the icon or selecting ? > Shortcuts.

Develop notebooks

This section describes how to develop notebook cells and navigate around a notebook.

A notebook has a toolbar that lets you manage the notebook and perform actions within it, and one or more cells (or commands) that you can run.

At the far right of a cell, the cell actions area contains three menus (Run, Dashboard, and Edit) and two actions (Hide and Delete).

To add a cell, mouse over a cell at the top or bottom and click the icon, or open the cell actions menu at the far right and select Add Cell Above or Add Cell Below.

To delete a cell, go to the cell actions menu at the far right and click Delete.

When you delete a cell, by default a delete confirmation dialog displays. To disable future confirmation dialogs, select the Do not show this again checkbox and click Confirm. You can also toggle the confirmation dialog setting with the Turn on command delete confirmation option in User Settings > Notebook Settings.

To restore deleted cells, either select Edit > Undo Delete Cells or use the (Z) keyboard shortcut.

To cut a cell, go to the cell actions menu at the far right and select Cut Cell.

You can also use the (X) keyboard shortcut.

To restore cut cells, either select Edit > Undo Cut Cells or use the (Z) keyboard shortcut.

You can select adjacent notebook cells using Shift + Up or Down for the previous and next cell respectively. Multi-selected cells can be copied, cut, deleted, and pasted.

To select all cells, select Edit > Select All Cells or use the command mode shortcut Cmd+A.

The notebook's default language is shown in a (<language>) link next to the notebook name; for example, a notebook whose default language is SQL shows (SQL).

To change the default language:

Click (<language>) link. The Change Default Language dialog displays.

Select the new language from the Default Language drop-down.

Click Change.

To ensure that existing commands continue to work, commands of the previous default language are automatically prefixed with a language magic command.

You can override the default language by specifying the language magic command %<language> at the beginning of a cell. The supported magic commands are: %python, %r, %scala, and %sql.

Note

When you invoke a language magic command, the command is dispatched to the REPL in the execution context for the notebook. Variables defined in one language (and hence in the REPL for that language) are not available in the REPL of another language. REPLs can share state only through external resources such as files in DBFS or objects in object storage.

Notebooks also support a few auxiliary magic commands:

- %sh: Allows you to run shell code in your notebook. To fail the cell if the shell command has a non-zero exit status, add the -e option. This command runs only on the Apache Spark driver, and not the workers. To run a shell command on all nodes, use an init script.

- %fs: Allows you to use dbutils filesystem commands. See Databricks CLI.

- %md: Allows you to include various types of documentation, including text, images, and mathematical formulas and equations. See the next section.
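The -e option makes %sh fail the cell when the shell command exits with a non-zero status. As a rough plain-Python analogy (not how Databricks implements %sh), `subprocess.run` with `check=True` raises `CalledProcessError` on a non-zero exit status instead of silently continuing. The helper name `run_or_fail` is hypothetical.

```python
import subprocess
import sys


def run_or_fail(args):
    """Run a command and raise if it exits with a non-zero status.

    Loosely mirrors `%sh -e`: a failing command stops the caller instead of
    being ignored. Returns the command's captured standard output.
    """
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout


# A command that succeeds: its output is returned.
ok = run_or_fail([sys.executable, "-c", "print('hello')"])

# A command that exits with status 3: check=True turns this into an exception.
try:
    run_or_fail([sys.executable, "-c", "import sys; sys.exit(3)"])
    failed_status = None
except subprocess.CalledProcessError as err:
    failed_status = err.returncode
```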

To include documentation in a notebook, you can use the %md magic command to identify Markdown markup. The included Markdown markup is rendered into HTML. For example, a Markdown line that begins with a single # marks up a level-one heading, and it is rendered as an HTML level-one heading.
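To make the Markdown-to-HTML step concrete, here is a toy renderer for a single heading line. It is only an illustration of the mapping (one to six # characters become `<h1>` to `<h6>`), not the renderer Databricks uses, and it ignores inline Markdown such as emphasis. The function name `render_heading` is hypothetical.

```python
import html
import re

# An ATX-style Markdown heading: 1-6 '#' characters, whitespace, then text.
HEADING = re.compile(r"^(#{1,6})[ \t]+(.*\S)[ \t]*$")


def render_heading(line):
    """Render one Markdown heading line as an HTML <hN> element.

    Returns None if the line is not a heading. Heading text is HTML-escaped.
    """
    match = HEADING.match(line)
    if match is None:
        return None
    level = len(match.group(1))
    text = html.escape(match.group(2))
    return f"<h{level}>{text}</h{level}>"
```

For example, `render_heading("## A & B")` returns `"<h2>A &amp; B</h2>"`, while a line without a leading # returns `None`.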

Collapsible headings

Cells that appear after cells containing Markdown headings can be collapsed into the heading cell. For example, a level-one heading called Heading 1 can have the two cells that follow it collapsed into it.

To expand and collapse headings, click the + and -.

Also see Hide and show cell content.

Link to other notebooks

You can link to other notebooks or folders in Markdown cells using relative paths. Specify the href attribute of an anchor tag as the relative path, starting with a $ and then following the same pattern as in Unix file systems.
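Because the path after the $ follows Unix conventions, ./ and ../ resolve against the folder that contains the current notebook. The sketch below shows that resolution with `posixpath`; the notebook paths and the helper name `resolve_notebook_link` are hypothetical, and treating Databricks link resolution as plain POSIX path normalization is an assumption.

```python
import posixpath


def resolve_notebook_link(current_notebook, href):
    """Resolve a `$`-prefixed relative link against the current notebook's folder.

    `current_notebook` is an absolute workspace path (hypothetical example:
    "/Users/someone/project/analysis"). The leading "$" is stripped and the
    rest is resolved like a Unix relative path.
    """
    if not href.startswith("$"):
        raise ValueError("notebook links are expected to start with '$'")
    relative = href[1:]
    folder = posixpath.dirname(current_notebook)
    return posixpath.normpath(posixpath.join(folder, relative))
```

With a current notebook at `/Users/someone/project/analysis`, the link `$./helpers` resolves to `/Users/someone/project/helpers` and `$../shared/utils` resolves to `/Users/someone/shared/utils`.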

Display images

To display images stored in the FileStore, reference them with Markdown image syntax in a Markdown cell. For example, if you have the Databricks logo image file in FileStore and include a Markdown image reference to it in a Markdown cell, the image is rendered in the cell.
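As a minimal sketch, assuming the convention from Databricks' own examples that a file stored at dbfs:/FileStore/<path> is referenced from a Markdown cell as files/<path>, a helper can build the image reference for you. The function name `filestore_image_markdown` and the file name in the example are hypothetical.

```python
def filestore_image_markdown(dbfs_path, alt_text="image"):
    """Build a Markdown image reference for a file stored under /FileStore.

    Assumes (not confirmed here) that dbfs:/FileStore/<path> is served to
    Markdown cells as files/<path>.
    """
    for prefix in ("dbfs:/FileStore/", "/FileStore/"):
        if dbfs_path.startswith(prefix):
            relative = dbfs_path[len(prefix):]
            return f"![{alt_text}](files/{relative})"
    raise ValueError(f"not a FileStore path: {dbfs_path}")
```

For example, `filestore_image_markdown("dbfs:/FileStore/logo.png", "logo")` returns `![logo](files/logo.png)`, which you would paste into a %md cell.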

Display mathematical equations

Notebooks support KaTeX for displaying mathematical formulas and equations.

Include HTML

You can include HTML in a notebook by using the function displayHTML. See HTML, D3, and SVG in notebooks for an example of how to do this.
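`displayHTML` takes a string of HTML. The sketch below builds a small, escaped HTML table from Python rows; `rows_to_html` is a hypothetical helper, and because `displayHTML` exists only inside a Databricks notebook, the final call is left as a comment.

```python
import html


def rows_to_html(header, rows):
    """Render a header and a list of rows as an escaped HTML table string."""
    def cells(tag, values):
        # Escape every value so characters like '<' and '&' display literally.
        return "".join(f"<{tag}>{html.escape(str(v))}</{tag}>" for v in values)

    parts = ["<table>", f"<tr>{cells('th', header)}</tr>"]
    parts += [f"<tr>{cells('td', row)}</tr>" for row in rows]
    parts.append("</table>")
    return "".join(parts)


page = rows_to_html(["name", "score"], [["a<b", 1], ["c", 2]])
# Inside a Databricks notebook you would then render it with:
# displayHTML(page)
```

Escaping the cell values matters because the string is interpreted as markup: an unescaped `a<b` would be parsed as the start of a tag.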

Note

The displayHTML iframe is served from the domain databricksusercontent.com and the iframe sandbox includes the allow-same-origin attribute. databricksusercontent.com must be accessible from your browser. If it is currently blocked by your corporate network, it must be added to an allow list.

You can have discussions with collaborators using command comments.

To toggle the Comments sidebar, click the Comments button at the top right of a notebook.

To add a comment to a command:

Highlight the command text and click the comment bubble.

Add your comment and click Comment.

To edit, delete, or reply to a comment, click the comment and choose an action.

There are three display options for notebooks:

- Standard view: results are displayed immediately after code cells

- Results only: only results are displayed

- Side-by-side: code and results cells are displayed side by side, with results to the right

Go to the View menu to select your display option.

To show line numbers or command numbers, go to the View menu and select Show line numbers or Show command numbers. Once they’re displayed, you can hide them again from the same menu. You can also enable line numbers with the keyboard shortcut Control+L.

If you enable line or command numbers, Databricks saves your preference and shows them in all of your other notebooks for that browser.

Command numbers above cells link to that specific command. If you click the command number for a cell, it updates your URL to be anchored to that command. If you want to link to a specific command in your notebook, right-click the command number and choose copy link address.

To find and replace text within a notebook, select Edit > Find and Replace. The current match is highlighted in orange and all other matches are highlighted in yellow.

To replace the current match, click Replace. To replace all matches in the notebook, click Replace All.

To move between matches, click the Prev and Next buttons. You can also press Shift+Enter and Enter to go to the previous and next matches, respectively.

To close the find and replace tool, click the icon or press Esc.

You can use Databricks autocomplete to automatically complete code segments as you type them. Databricks supports two types of autocomplete: local and server.

Local autocomplete completes words that are defined in the notebook. Server autocomplete accesses the cluster for defined types, classes, and objects, as well as SQL database and table names. To activate server autocomplete, attach your notebook to a cluster and run all cells that define completable objects.

Important

Server autocomplete in R notebooks is blocked during command execution.

To trigger autocomplete, press Tab after entering a completable object. For example, after you define and run the cells containing the definitions of MyClass and instance, the methods of instance are completable, and a list of valid completions displays when you press Tab.
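The paragraph refers to cells that define `MyClass` and `instance` without showing them. Cells along the following lines would make the methods of `instance` completable; the method names are hypothetical. The `completions` helper is only a local, prefix-matching stand-in for the list that appears after Tab, not how Databricks server autocomplete works.

```python
class MyClass:
    """A small class whose methods become completable once this cell runs."""

    def __init__(self, value):
        self.value = value

    def double(self):
        return self.value * 2

    def describe(self):
        return f"MyClass({self.value})"


instance = MyClass(21)


def completions(obj, prefix):
    """List public attribute names of obj that start with prefix, sorted."""
    return sorted(
        name for name in dir(obj)
        if name.startswith(prefix) and not name.startswith("_")
    )
```

Here `completions(instance, "d")` returns `["describe", "double"]`, roughly what typing `instance.d` and pressing Tab would offer.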

Type completion and SQL database and table name completion work in the same way.

In Databricks Runtime 7.4 and above, you can display Python docstring hints by pressing Shift+Tab after entering a completable Python object. The docstrings contain the same information as the help() function for an object.
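The docstring hint shows the same text that `help()` prints for an object. In plain Python, `inspect.getdoc` returns that docstring with its indentation cleaned up, which is a convenient way to see what a hint will contain. The function `area_of_circle` is a hypothetical example.

```python
import inspect
import math


def area_of_circle(radius):
    """Return the area of a circle.

    The radius must be non-negative.
    """
    if radius < 0:
        raise ValueError("radius must be non-negative")
    return math.pi * radius ** 2


# The docstring text that help(area_of_circle) displays, with indentation removed.
doc = inspect.getdoc(area_of_circle)
```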

Databricks provides tools that allow you to format SQL code in notebook cells quickly and easily. These tools reduce the effort to keep your code formatted and help to enforce the same coding standards across your notebooks.

You can trigger the formatter in the following ways:

Single cells

Keyboard shortcut: Press Cmd+Shift+F.

Command context menu: Select Format SQL in the command context drop-down menu of a SQL cell. This item is visible only in SQL notebook cells and in cells that use the %sql language magic.

Multiple cells

Select multiple SQL cells and then select Edit > Format SQL Cells. If you select cells of more than one language, only SQL cells are formatted. This includes cells that use %sql.

Here’s the first cell in the preceding example after formatting:

To display an automatically generated table of contents, click the arrow at the upper left of the notebook (between the sidebar and the topmost cell). The table of contents is generated from the Markdown headings used in the notebook.
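A minimal sketch of deriving a table of contents from Markdown headings, assuming each cell is a string and that Markdown cells begin with %md. The cell contents and the function name `table_of_contents` are hypothetical, and this is not the algorithm Databricks uses.

```python
import re

# A Markdown heading line: 1-6 '#' characters followed by whitespace and text.
HEADING = re.compile(r"^(#{1,6})[ \t]+(.*\S)[ \t]*$")


def table_of_contents(cells):
    """Return (level, title) pairs for headings in %md cells, in notebook order."""
    toc = []
    for cell in cells:
        lines = cell.splitlines()
        if not lines or not lines[0].startswith("%md"):
            continue  # Only Markdown cells contribute headings.
        # The first line may carry content after the magic, e.g. "%md # Title".
        first = lines[0][len("%md"):].strip()
        for line in [first] + lines[1:]:
            match = HEADING.match(line.strip())
            if match:
                toc.append((len(match.group(1)), match.group(2)))
    return toc


cells = [
    "%md # Load data",
    "print('not markdown')",
    "%md\n## Clean up\nSome notes",
    "%md ### Plot",
]
```

For these cells, `table_of_contents(cells)` returns `[(1, "Load data"), (2, "Clean up"), (3, "Plot")]`; the heading level could drive indentation in the rendered contents.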

To close the table of contents, click the left-facing arrow.

You can choose to display notebooks in dark mode. To turn dark mode on or off, select View > Notebook Theme and select Light Theme or Dark Theme.

Run notebooks

This section describes how to run one or more notebook cells.

The notebook must be attached to a cluster. If the cluster is not running, the cluster is started when you run one or more cells.

In the cell actions menu at the far right, click the icon and select Run Cell, or press Shift+Enter.

Important

The maximum size for a notebook cell, both contents and output, is 16 MB.

For example, try running this Python code snippet that references the predefined spark variable.

and then, run some real code:

Note

Notebooks have a number of default settings:

- When you run a cell, the notebook automatically attaches to a running cluster without prompting.

- When you press shift+enter, the notebook auto-scrolls to the next cell if the cell is not visible.

To change these settings, select User Settings > Notebook Settings and configure the respective checkboxes.

To run all cells before or after a cell, go to the cell actions menu at the far right, click the icon, and select Run All Above or Run All Below.

Run All Below includes the cell you are in. Run All Above does not.
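The asymmetry between the two commands is easy to misremember. Modeling the notebook as a list of cells with a zero-based index for the current cell, it corresponds to the two slices below; the function names are hypothetical and the sketch only models which cells are selected, not how they are executed.

```python
def run_all_above(cells, current):
    """Cells strictly before the current one; the current cell is NOT included."""
    return cells[:current]


def run_all_below(cells, current):
    """The current cell and every cell after it."""
    return cells[current:]


cells = ["cmd 1", "cmd 2", "cmd 3", "cmd 4"]
```

With the cursor in "cmd 3" (index 2), Run All Above covers "cmd 1" and "cmd 2", while Run All Below covers "cmd 3" and "cmd 4". Together the two slices cover every cell exactly once.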

To run all the cells in a notebook, select Run All in the notebook toolbar.

Important

Do not do a Run All if steps for mount and unmount are in the same notebook. It could lead to a race condition and possibly corrupt the mount points.

Python notebooks and %python cells in non-Python notebooks support multiple outputs per cell.

This feature requires Databricks Runtime 7.1 or above and can be enabled in Databricks Runtime 7.1-7.3 by setting spark.databricks.workspace.multipleResults.enabled to true. It is enabled by default in Databricks Runtime 7.4 and above.

Python and Scala notebooks support error highlighting. That is, the line of code that is throwing the error is highlighted in the cell. Additionally, if the error output is a stack trace, the cell in which the error is thrown is displayed in the stack trace as a link to the cell. You can click this link to jump to the offending code.

Notifications alert you to certain events, such as which command is currently running during Run all cells and which commands are in error state. When your notebook is showing multiple error notifications, the first one will have a link that allows you to clear all notifications.

Notebook notifications are enabled by default. You can disable them under User Settings > Notebook Settings.

Databricks Advisor automatically analyzes commands every time they are run and displays appropriate advice in the notebooks. The advice notices provide information that can assist you in improving the performance of workloads, reducing costs, and avoiding common mistakes.

View advice

A blue box with a lightbulb icon signals that advice is available for a command. The box displays the number of distinct pieces of advice.

Click the lightbulb to expand the box and view the advice. One or more pieces of advice will become visible.

Click the Learn more link to view documentation providing more information related to the advice.

Click the Don’t show me this again link to hide the piece of advice. The advice of this type will no longer be displayed. This action can be reversed in Notebook Settings.

Click the lightbulb again to collapse the advice box.

Advice settings

Access the Notebook Settings page by selecting User Settings > Notebook Settings or by clicking the gear icon in the expanded advice box.

Toggle the Turn on Databricks Advisor option to enable or disable advice.

The Reset hidden advice link is displayed if one or more types of advice is currently hidden. Click the link to make that advice type visible again.

You can run a notebook from another notebook by using the %run <notebook> magic command. This is roughly equivalent to a :load command in a Scala REPL on your local machine or an import statement in Python. All variables defined in <notebook> become available in your current notebook.

%run must be in a cell by itself, because it runs the entire notebook inline.

Note

You cannot use %run to run a Python file and import the entities defined in that file into a notebook. To import from a Python file you must package the file into a Python library, create a Databricks library from that Python library, and install the library into the cluster you use to run your notebook.

Example

Suppose you have notebookA and notebookB. notebookA contains a cell that has the following Python code:

Even though you did not define x in notebookB, you can access x in notebookB after you run %run notebookA.
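The effect of running a notebook inline can be approximated in plain Python by executing another source string directly in the caller's namespace, so its top-level names appear there. This is an analogy only: Databricks does not implement %run this way, and the source below (including the value of x) and the helper name `run_inline` are hypothetical.

```python
# Hypothetical source of notebookA; the real cell contents are not shown here.
NOTEBOOK_A_SOURCE = "x = 10\n"


def run_inline(source, namespace, name="notebookA"):
    """Execute source directly in namespace, loosely like %run inlines a notebook."""
    exec(compile(source, name, "exec"), namespace)


# notebookB's namespace: it never assigns x itself.
notebook_b = {}
run_inline(NOTEBOOK_A_SOURCE, notebook_b)
```

After the call, `notebook_b["x"]` is `10`, mirroring how x becomes visible in notebookB after %run notebookA.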

To specify a relative path, preface it with ./ or ../. For example, if notebookA and notebookB are in the same directory, you can alternatively run notebookA from notebookB using the relative path ./notebookA.

For more complex interactions between notebooks, see Notebook workflows.

Manage notebook state and results

After you attach a notebook to a cluster and run one or more cells, your notebook has state and displays results. This section describes how to manage notebook state and results.

To clear the notebook state and results, click Clear in the notebook toolbar and select the action:

By default downloading results is enabled. To toggle this setting, see Manage the ability to download results from notebooks. If downloading results is disabled, the button is not visible.

You can download a cell result that contains tabular output to your local machine. Click the button at the bottom of a cell.

A CSV file named export.csv is downloaded to your default download directory.

Download full results

By default Databricks returns 1000 rows of a DataFrame. When there are more than 1000 rows, a down arrow is added to the button. To download all the results of a query:

Click the down arrow next to the download button and select Download full results.

Select Re-execute and download.

After you download full results, a CSV file named export.csv is downloaded to your local machine, and the /databricks-results folder has a generated folder containing the full query results.

Cell content consists of cell code and the result of running the cell. You can hide and show the cell code and result using the cell actions menu at the top right of the cell.

To hide cell code:

- Click and select Hide Code

To hide and show the cell result, do any of the following:

- Click and select Hide Result

- Select

- Type Esc > Shift + o

To show hidden cell code or results, click the Show links:

See also Collapsible headings.

Notebook isolation refers to the visibility of variables and classes between notebooks. Databricks supports two types of isolation:

- Variable and class isolation

- Spark session isolation

Note

Since all notebooks attached to the same cluster execute on the same cluster VMs, even with Spark session isolation enabled there is no guaranteed user isolation within a cluster.

Variable and class isolation

Variables and classes are available only in the current notebook. For example, two notebooks attached to the same cluster can define variables and classes with the same name, but these objects are distinct.

To define a class that is visible to all notebooks attached to the same cluster, define the class in a package cell. Then you can access the class by using its fully qualified name, which is the same as accessing a class in an attached Scala or Java library.

Spark session isolation

Every notebook attached to a cluster running Apache Spark 2.0.0 and above has a pre-defined variable called spark that represents a SparkSession. SparkSession is the entry point for using Spark APIs as well as setting runtime configurations.

Spark session isolation is enabled by default. You can also use global temporary views to share temporary views across notebooks. See Create View or CREATE VIEW. To disable Spark session isolation, set spark.databricks.session.share to true in the Spark configuration.

Important

Setting spark.databricks.session.share to true breaks the monitoring used by both streaming notebook cells and streaming jobs. Specifically:

- The graphs in streaming cells are not displayed.

- Jobs do not block as long as a stream is running (they just finish “successfully”, stopping the stream).

- Streams in jobs are not monitored for termination. Instead you must manually call awaitTermination().

- Calling the display function on streaming DataFrames doesn't work.

Cells that trigger commands in other languages (that is, cells using %scala, %python, %r, and %sql) and cells that include other notebooks (that is, cells using %run) are part of the current notebook. Thus, these cells are in the same session as other notebook cells. By contrast, a notebook workflow runs a notebook with an isolated SparkSession, which means temporary views defined in such a notebook are not visible in other notebooks.

Version control

Databricks has basic version control for notebooks. You can perform the following actions on revisions: add comments, restore and delete revisions, and clear revision history.

To access notebook revisions, click Revision History at the top right of the notebook toolbar.

To add a comment to the latest revision:

Click the revision.

Click the Save now link.

In the Save Notebook Revision dialog, enter a comment.

Click Save. The notebook revision is saved with the entered comment.

To restore a revision:

Click the revision.

Click Restore this revision.

Click Confirm. The selected revision becomes the latest revision of the notebook.

To delete a notebook’s revision entry:

Click the revision.

Click the trash icon.

Click Yes, erase. The selected revision is deleted from the notebook’s revision history.

To clear a notebook’s revision history:

Select File > Clear Revision History.

Click Yes, clear. The notebook revision history is cleared.

Warning

Once cleared, the revision history is not recoverable.

Note

To sync your work in Databricks with a remote Git repository, Databricks recommends using Repos for Git integration.

Databricks also integrates with these Git-based version control tools:

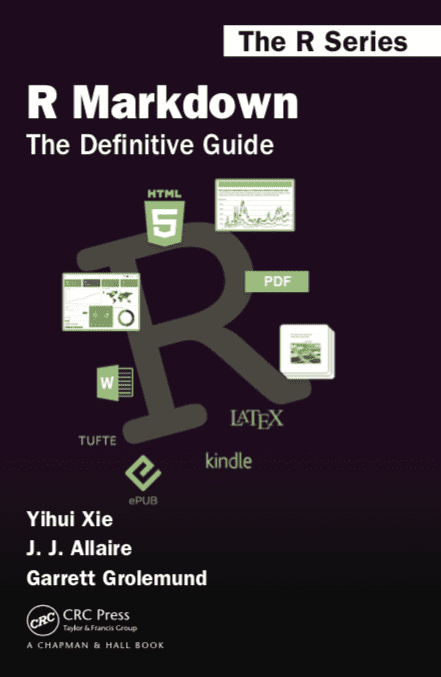

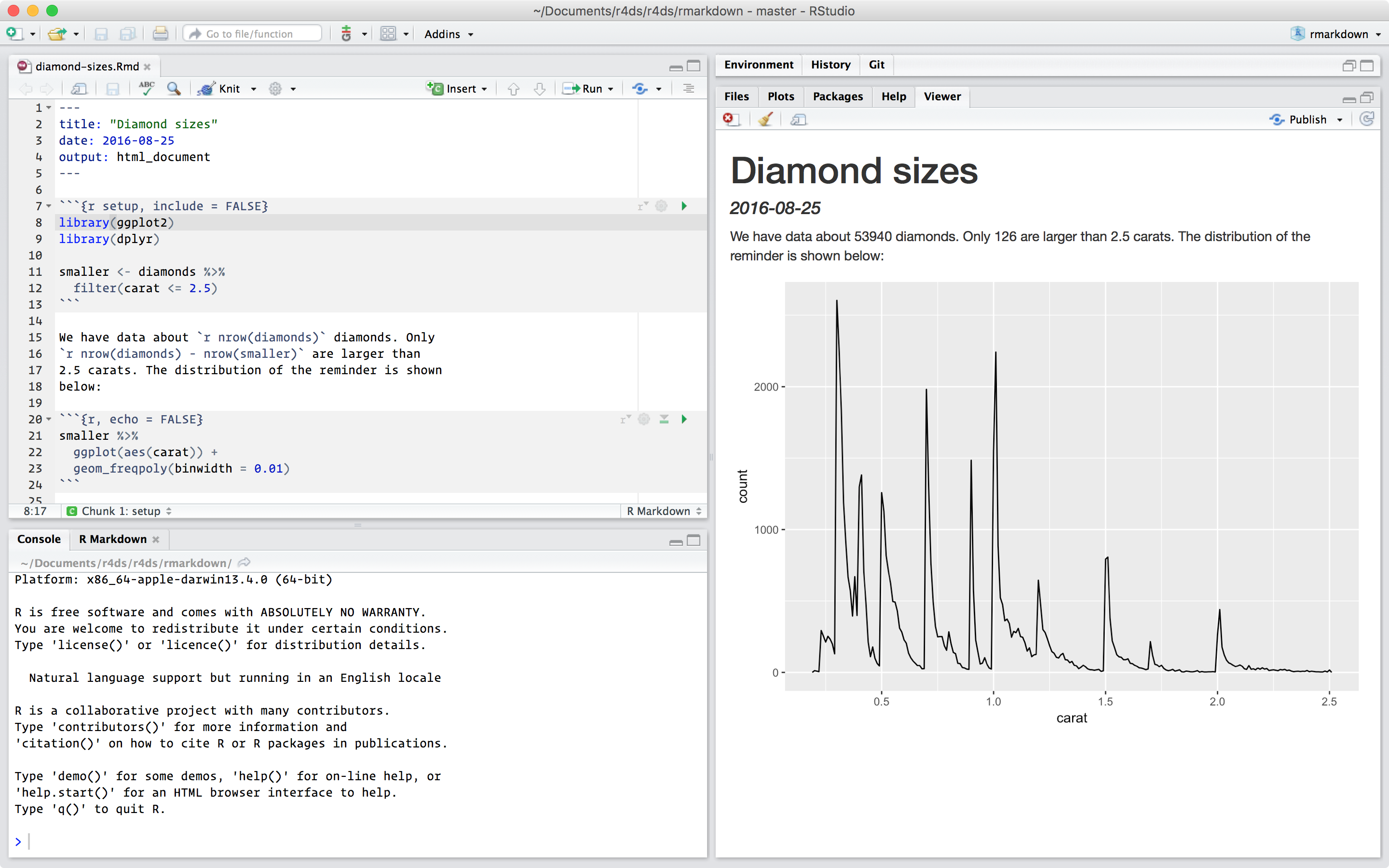

You can use Python with RStudio professional products to develop and publish interactive applications with Shiny, Dash, Streamlit, or Bokeh; reports with R Markdown or Jupyter Notebooks; and REST APIs with Plumber or Flask.

For an overview of how RStudio helps support Data Science teams using R & Python together, see R & Python: A Love Story.

For more information on administrator workflows for configuring RStudio with Python and Jupyter, refer to the resources on configuring Python with RStudio.

Developing with Python

Data scientists and analysts can:

- Work with the RStudio IDE, Jupyter Notebook, JupyterLab, or VS Code editors from RStudio Server Pro

Want to learn more about RStudio Server Pro and Python?

For more information on integrating RStudio Server Pro with Python, refer to the resources on configuring Python with RStudio.

Publishing Python Content

Data scientists and analysts can publish Python content to RStudio Connect by:

- Publishing Jupyter Notebooks that can be scheduled and emailed as reports

- Publishing Flask applications and APIs

- Publishing Dash applications

- Publishing Streamlit applications

- Publishing Bokeh applications

Ready to publish Jupyter Notebooks to RStudio Connect?

View the user documentation for publishing Jupyter Notebooks to RStudio Connect

Ready to share interactive Python content on RStudio Connect?

Learn more about publishing Dash or Flask applications and APIs.

View example code as well as samples in the user guide.

Publishing Python and R Content

Data scientists and analysts can publish mixed Python and R content to RStudio Connect by publishing:

- Shiny applications that call Python scripts

- R Markdown reports that call Python scripts

- Plumber APIs that call Python scripts

Mixed content relies on the reticulate package, which you can read more about on the project's website.

View the user documentation for publishing content that uses Python and R to RStudio Connect

Cheat sheet for using Python with R and reticulate

Managing Python Packages

RStudio Package Manager supports both R and Python packages. Visit this guide to learn more about how you can securely mirror PyPI.

Additional Resources

Want to learn more about RStudio Connect and Python?

Frequently asked questions for using Python with RStudio Connect

Learn about best practices for using Python with RStudio Connect

Want to see examples of using Python with RStudio?

View code examples on GitHub of Using Python with RStudio

View examples of Flask APIs published to RStudio Connect