
Good old HTML tables.

At one point, most websites were laid out entirely with HTML tables.

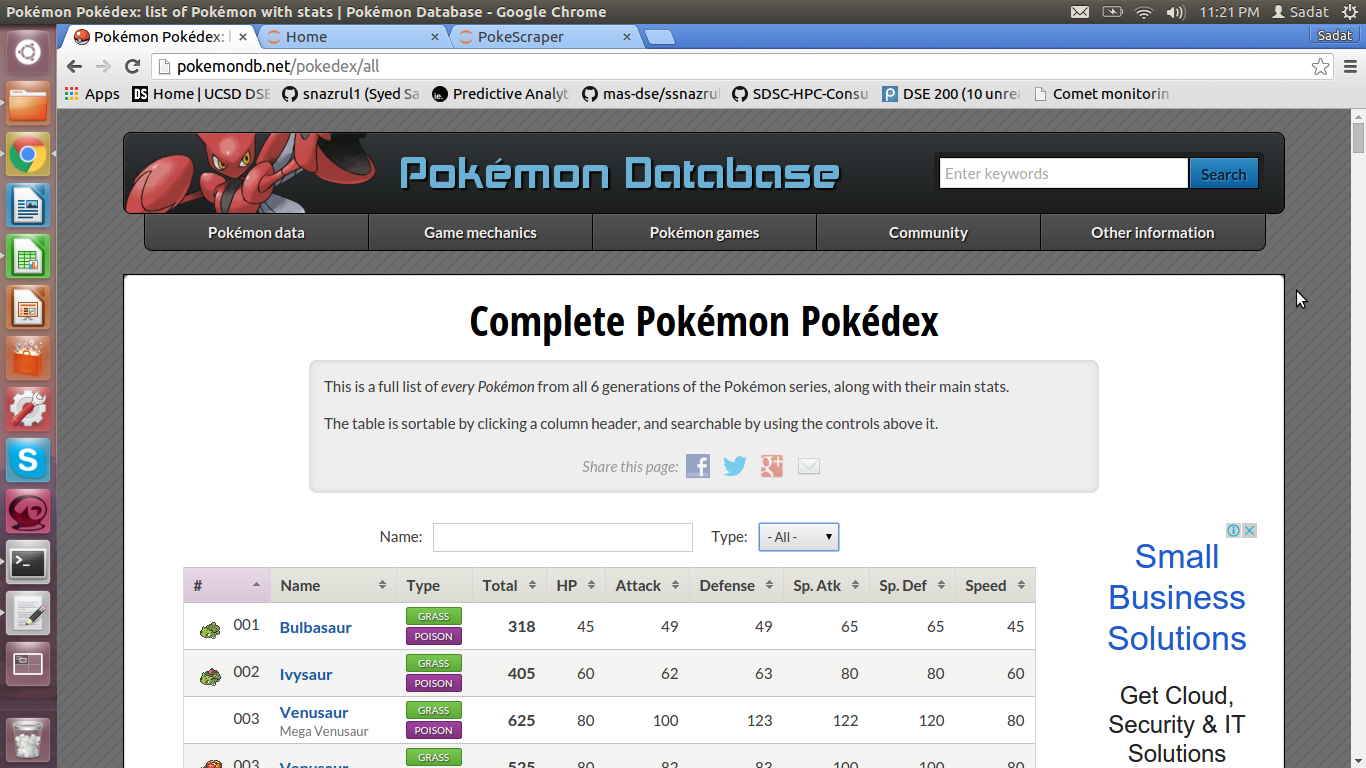

Nowadays, though, you are more likely to want to scrape data from an HTML table into an Excel spreadsheet or JSON file.

A web scraper can help you automate this task, since copying and pasting usually messes up the formatting of your data.

Web Scraping HTML Tables

For this example, we will use ParseHub, a free and powerful web scraper to scrape data from tables.

The data will come from Wikipedia: we will scrape Premier League scores from the 1992-1993 season.

How to Scrape HTML Tables into Excel

Now it’s time to get scraping.

- Make sure to download ParseHub and boot it up. Inside the app, click on Start New Project and submit the URL you will scrape. ParseHub will now render the page.

- First, we will scroll down all the way to the League Table section of the article and we will click on the first team name on the list. It will be highlighted in green to indicate it has been selected.

- The rest of the team names will be highlighted in yellow. Click on the second one on the list to select them all.

- On the left sidebar, rename your selection to team. Since we only want the data in the table, expand the selection and remove the URL extraction.

- Now we will start extracting the rest of the data on the table. To do this, start by clicking on the PLUS(+) sign next to your team selection and choose the Relative Select command.

- Using this command, click on the name of the first team on the list and then on the number beside it. An arrow will appear to show the association you’re creating.

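- Rename your Relative Select command to played. Now, repeat the previous three steps (add a Relative Select command, click on the team name and then on the value, rename the command) to pull each remaining column of data from the table.

Once you’ve added every column, ParseHub can scrape the entire table into an Excel sheet.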
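
For readers curious about what this selection amounts to under the hood, here is a minimal sketch in Python of turning an HTML table into rows of cell text. It is not how ParseHub works internally; it only uses the standard library's `html.parser`, and the `TableParser` class, the `table_rows` helper, and the sample markup are made up for illustration.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the cell text of every <tr> as a list of rows."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # cells of the row being read (None outside <tr>)
        self._cell = None   # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Keep only text that sits inside a cell; link text is kept,
        # the link URL is ignored (like removing the URL extraction).
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_rows(html):
    """Return every table row in `html` as a list of cell strings."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Placeholder markup, not real league data.
SAMPLE_HTML = """
<table>
  <tr><th>Team</th><th>Played</th></tr>
  <tr><td><a href="/wiki/Team_A">Team A</a></td><td>42</td></tr>
  <tr><td><a href="/wiki/Team_B">Team B</a></td><td>42</td></tr>
</table>
"""

rows = table_rows(SAMPLE_HTML)
```

Each inner list corresponds to one table row, with the team name playing the role of the team selection and the second cell the role of played.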

Running your scrape

To run your scrape job, click on the green Get Data button on the left sidebar.

Here, you will be able to run your scrape job, test it or schedule it for later.

For longer scrape jobs, we recommend doing a test run first to confirm that the project is working correctly.

In this case, we will run it right away. Now ParseHub will go off and collect all the data we selected.

Once your scrape is complete, you will be able to download the data as an Excel or JSON file.
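
To make the difference between the two download formats concrete, here is a small Python sketch that writes the same rows as CSV text (which Excel opens directly) and as JSON. The helper names and the placeholder rows are assumptions for illustration, and the exact layout of ParseHub's own files may differ.

```python
import csv
import io
import json


def rows_to_csv(header, rows):
    """Serialize a header and rows as CSV text, one line per row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


def rows_to_json(header, rows):
    """Serialize the rows as a JSON list of objects keyed by column name."""
    records = [dict(zip(header, row)) for row in rows]
    return json.dumps(records, indent=2)


# Placeholder values, not real league data.
header = ["team", "played"]
rows = [["Team A", "42"], ["Team B", "42"]]

csv_text = rows_to_csv(header, rows)
json_text = rows_to_json(header, rows)
```

CSV keeps the flat, spreadsheet-like shape of the table, while JSON names every value, which is usually easier to consume from other programs.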

Closing Thoughts

You now know how to scrape HTML tables from any website.

However, we know that not every website is built the same. If you run into any issues while setting up your project, reach out to us via email or chat and we’ll be happy to assist.

Interested in scraping more than one page worth of tables? Check out our guide on scraping Wikipedia where we do just that!

Happy Scraping!

If you’re a fantasy nerd like me, having access to sports stats can be extremely useful in giving you a leg up on your competition!

By sorting players by certain stats, you can find hidden gems for bargain prices, especially in later rounds of the draft. Think Moneyball for fantasy sports.

In this tutorial, we will demonstrate how to use ParseHub to extract stats on every quarterback in the NFL from the NFL website.

In this tutorial, we will show you how to:

- Scrape data from a sports stats website.

- Import the data into a Google Doc - for reference and to share with friends

Step 1: Use a web scraper to scrape data from a sports database

1. Extract the players’ names

- Download ParseHub for Free and start up the desktop app

- Go to the landing page for NFL quarterback stats.

- Using the Select tool, select the first player name on the list by clicking on it.

- Click on another player name and they will all be selected and extracted for you.

- Rename this selection to player.

Now for the fun part. For every player name that you’ve extracted, you want to create a relative selection to their stats, and extract them as well. Say, for example, you want to know the team that the QB is on, their number of completed and attempted passes, total yards, touchdowns, and interceptions thrown.

You can collect all of that data by following the steps below.

2. Collect the team names

- Click on the 'plus' button next to the 'Begin new entry in player' command.

- Add the relative select command to create a link between the player name, and their team name. Click on the player name and click on the team name. All of the team names should be selected for you - with an arrow pointing from the player names to the team names on the entire page.

- Name this selection team. The name and URL will be extracted for you.

Now you can just repeat the steps in the 'Collect the team names' section for each stat that you want. I will show you how to do one more, and grab the interception stats for each QB.

- Click on the 'plus' button next to the 'Begin new entry in player' command and add the relative select command again.

- Click on the player name and click on the number of interceptions that they have. Now all of them will be selected.

- Name this selection int.
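
Conceptually, every relative selection attaches one more field to each player's entry. The Python sketch below shows that shape and how such records can then be sorted by a stat. The field names follow the selections above (player, team, int), but every value is an invented placeholder, not a real NFL stat, and the `fewest_interceptions` helper is hypothetical.

```python
# One record per player; each relative selection contributes one key.
# All values are invented placeholders, not real NFL stats.
records = [
    {"player": "QB One", "team": "Team A", "int": 12},
    {"player": "QB Two", "team": "Team B", "int": 4},
    {"player": "QB Three", "team": "Team C", "int": 9},
]


def fewest_interceptions(players, limit):
    """Return the `limit` players with the lowest interception counts."""
    return sorted(players, key=lambda record: record["int"])[:limit]


top_two = [record["player"] for record in fewest_interceptions(records, 2)]
```

Sorting by a different key, such as yards or touchdowns, works the same way once those selections have been added.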

Here’s a sample of the data that we collected by repeating the above steps for team, interceptions, yards, attempts, completions, and touchdowns.

This selects only the first page of players, however. Once you’ve created the relative selections for the stats you want, you’ll need to take one final step to get ParseHub to go through all the pages of QBs using pagination.

Step 2: Scrape stats from all of the pages

- Click on the 'plus' button next to the 'Select page' command.

- Add a Select command and click on the 'Next' button on the top right corner of the table.

- Name this selection next.

- Create a Conditional command to ensure that the selected link is actually the 'next' button. Enter $e.text == 'next'.

Note: This is not usually required; the NFL's website just works differently than most. Usually, you would just select the 'next' button, and use the Click tool to go to the next page.

- Open the command menu by clicking on the 'plus' button next to the Conditional command, and add a Click command.

- ParseHub naturally navigates by “clicking”, which is what we want. Choose Go to Existing Template and select main_template, so that ParseHub repeats on every following page exactly what we instructed it to do on this one.
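
The pagination logic above (extract, check that the link really says 'next', click, repeat with the same template) can be sketched in Python as a simple loop. This is an illustration under assumptions, not ParseHub's implementation: the pages are modeled as an in-memory dictionary, and the `scrape_all_pages` function, the page ids, and the row values are all made up.

```python
def scrape_all_pages(pages, start):
    """Collect rows from `start` onward, following only links labeled 'next'."""
    collected = []
    visited = set()              # guards against looping over the same page
    current = start
    while current is not None and current not in visited:
        visited.add(current)
        page = pages[current]
        collected.extend(page["rows"])   # same extraction on every page
        link = page["link"]
        # The conditional: follow the link only if its text is 'next'.
        if link is not None and link["text"].strip().lower() == "next":
            current = link["target"]
        else:
            current = None
    return collected


# Placeholder pages; on the last page the selected link is not a 'next' link.
PAGES = {
    "page1": {"rows": ["QB 1", "QB 2"], "link": {"text": "next", "target": "page2"}},
    "page2": {"rows": ["QB 3", "QB 4"], "link": {"text": "next", "target": "page3"}},
    "page3": {"rows": ["QB 5"], "link": {"text": "prev", "target": "page2"}},
}

all_rows = scrape_all_pages(PAGES, "page1")
```

Without the text check, the loop would follow the 'prev' link on the last page and revisit earlier pages, which is the situation the Conditional command is there to prevent.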

Run your project and download your data

- Click on the 'Get Data' button

- Click on the 'Run' button

- Click on the 'Save and Run' button

- Wait for ParseHub to extract all of the data from the page

- When you see 'CSV' or 'JSON' appear under the 'Actions' heading - click to download your data.

- You will also get an email when your project is finished scraping data. There will be a link to your data.

And there! You’re all done. Run your project, extract your data, and you’ll have all the stats you want at your fingertips.

Step 3: Set up the ParseHub API in Google Sheets

Instead of downloading your data from ParseHub as an Excel file just one time, you can use the IMPORTDATA function to import the data into Google Sheets and keep it up to date.

Every time the project runs and scrapes the website, this Google Sheet will be updated with new data. You can also schedule ParseHub to run and grab your data consistently. When ParseHub scrapes data on a schedule, the data in your Google Doc will also be refreshed.

1. Find your API key and project token:

- Open the project we just worked on

- Find your project token in the 'Settings' tab of the project

- Save the project to see your Project Token.

- Find your API key by clicking on the 'profile' icon in the top-right corner of the app.

- Click 'Account' and you will see your API key listed.

2. Open Google Sheets and create your IMPORTDATA function

- Open a new Google Sheet

- Click on the A1 cell and type in =IMPORTDATA()

- In the =IMPORTDATA() function create your URL like the following:

=IMPORTDATA("https://www.parsehub.com/api/v2/projects/PROJECT_TOKEN/last_ready_run/data?api_key=API_KEY&format=csv")

- Replace the PROJECT_TOKEN with the actual project token from the 'Settings' tab of your project.

- Replace the API_KEY with the API key from your account.

We created this URL based on the ParseHub API reference. Take a look at it for more information.

If you did everything correctly, you should see the data from your project appear almost immediately.
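
The same data can also be pulled outside of Google Sheets. The Python sketch below builds the data URL and reads the CSV into rows. It assumes the `last_ready_run/data` endpoint and the `api_key` and `format` query parameters from the ParseHub API reference, and that the response may arrive gzip-compressed; the function names are hypothetical, and `PROJECT_TOKEN` and `API_KEY` are placeholders for your own values.

```python
import csv
import gzip
import io
from urllib.parse import quote, urlencode
from urllib.request import urlopen

API_ROOT = "https://www.parsehub.com/api/v2"


def build_data_url(project_token, api_key, fmt="csv"):
    """Build the URL for the data of the project's last ready run."""
    query = urlencode({"api_key": api_key, "format": fmt})
    token = quote(project_token, safe="")
    return f"{API_ROOT}/projects/{token}/last_ready_run/data?{query}"


def fetch_rows(project_token, api_key):
    """Download the CSV export and return it as a list of rows."""
    with urlopen(build_data_url(project_token, api_key)) as response:
        body = response.read()
    # Assumption: the body may be gzip-compressed; detect it by its magic bytes.
    if body[:2] == b"\x1f\x8b":
        body = gzip.decompress(body)
    return list(csv.reader(io.StringIO(body.decode("utf-8"))))


# Placeholders: substitute the project token and API key from your account.
url = build_data_url("PROJECT_TOKEN", "API_KEY")
```

Calling `fetch_rows` with real credentials performs the network request; `build_data_url` alone is enough to check that the URL matches the one used in the IMPORTDATA formula.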

ParseHub can handle much more complex projects and larger data sets, and you can set up any kind of project you want using this or similar methods. Good luck and happy Fantasy-ing!

[This post was originally written on March 16, 2015 and updated on August 6, 2019]